Concurrency basics

If you are confused when you hear your colleagues talking about parallel "this" and parallel "that", then this article is for you! Get ready and let's get started ^^

The basics

Let’s imagine that you have a nerd party with your smart friends and you decide to play chess with them.

1st scenario (no concurrency)

You play a match with one of your friends and only AFTER that you play with another friend. The one who has to wait says: “Oh, man! I don’t wanna sit here and wait for 1 hour”. He is not happy! Houston, we have a problem!

2nd scenario (concurrent)

You play like a pro: you make one move and while the first friend is busy with his move, you visit the other friend and make the next move. Both friends are happy and you are the hero of the party!

What is concurrency?

Concurrency is a way of making two or more things progress simultaneously. In practice it means that a worker (or a player, as in the chess example) knows how to switch between tasks so that they appear to happen at the same time.
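To make the two chess scenarios concrete, here is a minimal Python sketch. Everything in it is made up for illustration: the friends are called Alice and Bob, each game lasts just two moves, and `game`, `play_sequentially` and `play_concurrently` are hypothetical helper names. A generator stands in for a game that pauses after each of your moves.

```python
from itertools import zip_longest

def game(friend, moves):
    """One chess game: pauses (yields) after each of your moves."""
    for move in range(1, moves + 1):
        yield f"{friend}: move {move}"

def play_sequentially(games):
    """1st scenario: finish one game completely before starting the next."""
    log = []
    for g in games:
        log.extend(g)  # drains the whole game before moving on
    return log

def play_concurrently(games):
    """2nd scenario: make one move per board, then walk to the next board."""
    log = []
    for round_of_moves in zip_longest(*games):
        # zip_longest pads finished games with None, so skip those
        log.extend(m for m in round_of_moves if m is not None)
    return log

sequential = play_sequentially([game("Alice", 2), game("Bob", 2)])
concurrent = play_concurrently([game("Alice", 2), game("Bob", 2)])
print(sequential)
print(concurrent)
```

In the first log Bob only shows up after Alice’s game is over. In the second one the moves alternate, even though there is still only one player doing all the work.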

Ok, but what about computers?

The idea is basically the same. If you imagine having just a single core on your CPU, then it can only do one thing at a time. So if you have long-running tasks they will block each other: all the other tasks will wait until the current one is finished.

In some cases it’s ok, but if you want your computer to be responsive to your actions or do multiple things ‘at the same time’, you need to apply one of the strategies below.

Your OS already does that…

If you imagine listening to music and writing an essay on your old PC (1 core CPU), you will probably have no problems with that. Why? Because your computer knows how to switch between those tasks, and because it happens super fast, you don’t notice it. But in reality your CPU does only one thing at a time. You are just too slow to see it :)

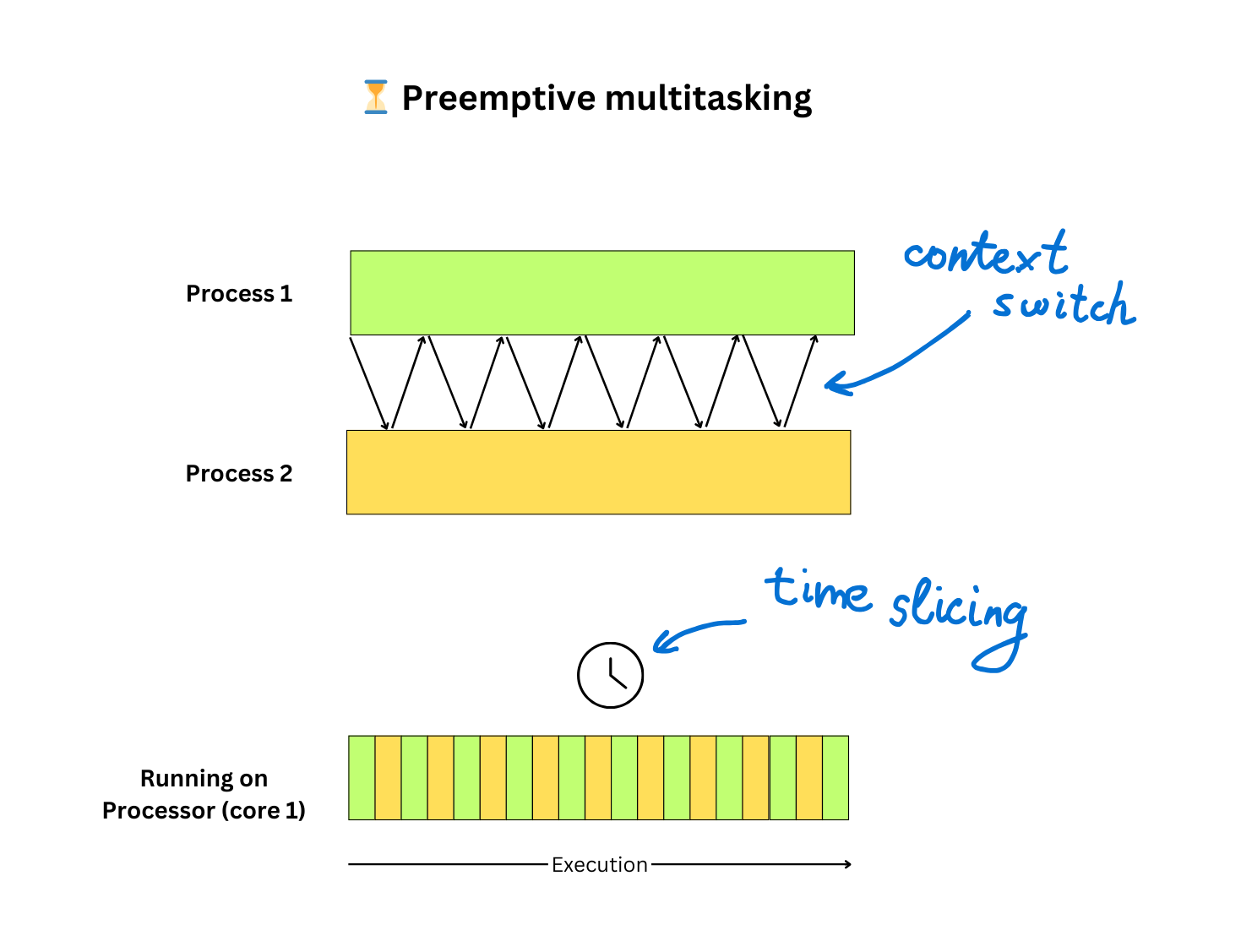

It is called preemptive multitasking and your OS knows how to do that properly. The OS has a built-in scheduler that acts like a real-world manager: it gives each task (aka process) a slice of time and makes the processor switch between them, whether the task likes it or not. Switching between tasks is called a context switch. Your CPU jumps between tasks. And it takes time! The more open tasks you have, the more your CPU struggles with these switches. But there is good news: if one of your processes freezes, the others are not affected, because they are independent of each other.

What about my own app?

If you want to develop your own application, you will probably face the same thing: doing multiple things at the same time. You can rely on the same mechanism of switching (Java threads, for example), but if you have too many things you wanna do simultaneously, you will run into trouble. These independent threads are costly, both in terms of memory (RAM) and CPU. You need something better…
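Here is roughly what relying on the switching mechanism looks like in code. The article mentions Java threads, but this sketch uses Python’s `threading` module instead. The function `count_up` and the task names are invented for the example. In CPython each `Thread` is backed by a real OS thread, so it is the OS scheduler (not our code) that decides when to switch between them.

```python
import threading

results = {}

def count_up(name, limit):
    """A stand-in for 'some work': sum the numbers below limit."""
    total = 0
    for i in range(limit):
        total += i
    results[name] = total  # each thread writes under its own key

threads = [
    threading.Thread(target=count_up, args=("first", 100_000)),
    threading.Thread(target=count_up, args=("second", 100_000)),
]
for t in threads:
    t.start()  # from here on the OS may switch between the threads at any point
for t in threads:
    t.join()   # wait until both are done
print(results)
```

Two threads are cheap enough. The trouble starts when you want thousands of them, because every thread needs its own stack and adds more switching work for the scheduler.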

Let’s control it

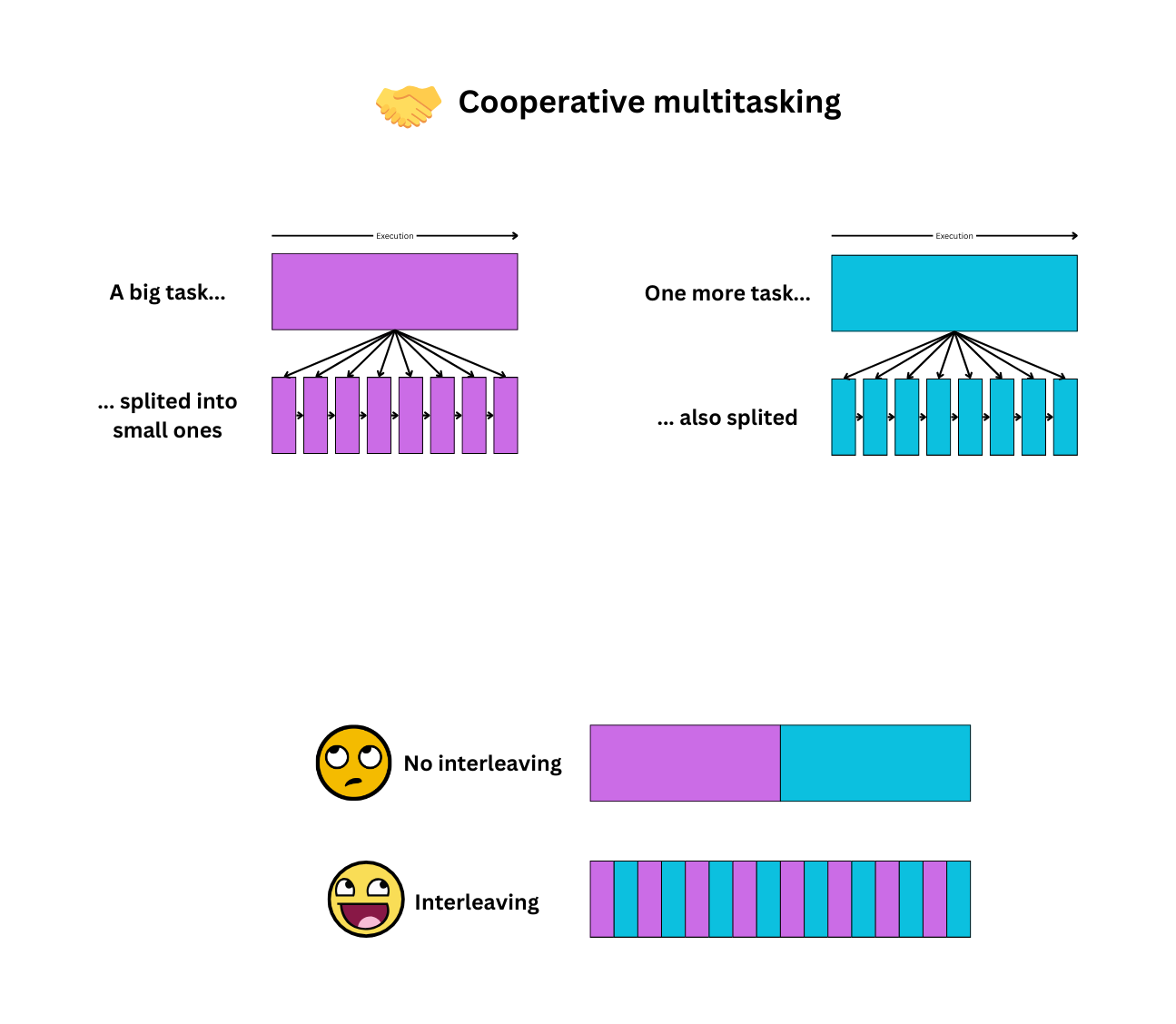

Let’s say we develop a stupid app that generates a million numbers and writes them into a file. What we could do is split this long-running task into small sub-tasks. So, instead of generating 1 000 000 numbers in one go, it will generate 1000 numbers 1000 times (1000 * 1000 = 1 000 000). What is the difference? Well, if we want to show a progress bar, we can start both tasks (generating numbers and updating the progress bar) right away. The underlying system will interleave the small sub-tasks so that both tasks make progress. As a result you will see the progress bar moving (after every 1000 numbers). It’s called cooperative multitasking.

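The ‘underlying system’ that I mentioned is a piece of code that we implement ourselves (of course we can use 3rd party libraries). It’s not controlled by the OS, but rather by the client code that we write. This approach is really good at saving resources, because now we can have concurrency inside a single OS process (or even a single thread). So no OS-level context switches between our tasks and no memory wasted on extra threads! The catch: every sub-task has to hand control back voluntarily, so one sub-task that never finishes blocks everything else.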
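A tiny version of such a system could look like the sketch below. It is only one possible way to do it, under a few assumptions of mine: tasks are Python generators, `yield` marks the end of a sub-task, the names `write_numbers`, `show_progress` and `run` are hypothetical, and an in-memory `io.StringIO` stands in for the file. The progress bar is simulated by collecting percentages in a list.

```python
import io
import random
from collections import deque

def write_numbers(out, state, chunks=1000, chunk_size=1000):
    """Long task split into sub-tasks: write one chunk, then give up control."""
    for _ in range(chunks):
        for _ in range(chunk_size):
            out.write(f"{random.random()}\n")
        state["written"] += chunk_size
        yield  # end of one sub-task: let the scheduler run something else

def show_progress(state, total, reports):
    """Progress bar task: record the current percentage, then give up control."""
    while state["written"] < total:
        reports.append(100 * state["written"] // total)
        yield
    reports.append(100)

def run(tasks):
    """The 'underlying system': a round-robin loop inside a single thread."""
    queue = deque(tasks)
    while queue:
        task = queue.popleft()
        try:
            next(task)          # run the task until its next yield
            queue.append(task)  # not finished yet: back of the line
        except StopIteration:
            pass                # finished: drop it

state = {"written": 0}
reports = []
out = io.StringIO()  # stands in for the file
total = 1000 * 1000
run([write_numbers(out, state), show_progress(state, total, reports)])
print(state["written"], reports[-1])
```

Notice that `run` never interrupts anybody. A task keeps the CPU until it reaches `yield`, which is exactly why the big job has to be cut into chunks of 1000.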

I hope you enjoyed it!

See you later and good luck with concurrency in your daily life!